Machine Learning for Understanding Materials Synthesis

3 posters

Page 1 of 1

Machine Learning for Understanding Materials Synthesis

Machine Learning for Understanding Materials Synthesis

(more at link... )

Machine Learning for Understanding Materials Synthesis

By Matthew Hautzinger / November 24, 2017

Title: Materials Synthesis Insights from Scientific Literature via Text Extraction and Machine Learning

Authors: Edward Kim, Kevin Huang, Adam Saunders, Andrew McCallum, Gerbrand Ceder, and Elsa Olivetti

Year: 2017

Journal: Chemistry of Materials

The sheer volume of publications makes scientific literature a vast sea of information that cannot be understood by one person. Skimming through papers and reading reviews that summarize multiple reports can help, but how can we make understanding literature more efficient? The authors of this paper present the idea of handling scientific reports the same way data scientists handle big data. They make efforts to create a better method of extracting the information in scientific papers by using machine learning techniques to analyze reported syntheses.

Automate the Mundane (Lit Research)

The authors set out to determine links between synthesis conditions and materials produced. They focus on metal oxides synthesis, which is an important and relatively well understood system. The researchers use application programming interfaces (APIs), basically an automated literature search, to find papers related to metal oxides synthesis. These papers are then “read” with a natural language processing (NLP) script to extract details of synthesis and create a database of synthetic conditions. This database is mined to develop trends and useful correlations. The API search used is through CrossRef which uses keywords related to the desired material (metal oxides in this case). The individual paragraphs within the papers found are then read and represented as mathematical objects (vectors) based on the number of important keywords. A classifier then determines if the paragraph is related to synthesis or not, based on the number of keywords found. These paragraphs are transformed into tree tables (like a multiplication tree) with the root of the tree being the type of synthesis. In the case of oxide synthesis, this is either hydrothermal or calcination reactions. The branches are then made up of experimental conditions and results including temperature, reaction time, number of atoms in the structure, and structural characterization (bulk or nano). The combination of these trees for one data set on metal oxide synthesis is presented in graphical form in Figure 1. This figure represents an amount of data which is close to the number of reaction conditions a single researcher could produce in a career. It incorporates 12913 publications, which is a little over a publication a day for 30 years.

Figure 1. Showing binary metal oxide synthesis can be done with calcination reactions at lower temperatures than pentanary compounds.

We can see highly reliable trends in these plots based on the large number of trials. The trend the authors point out is that the non-binary compounds (compounds multiple types of atoms, like K-Na bismuth titanate) require higher temperatures to form relative to their simpler binary counterparts. While this is an expected and generally accepted conclusion, the fact that a plot generated by computers sifting through literature can show the same correct trends is very cool.

Which Conditions Determine Shape?

The researchers didn’t stop at these simple conditions. They applied their methods to the synthesis of titania nanotubes. Commonly controlled variables such as temperature, annealing time, and sodium hydroxide (NaOH) concentration were analyzed. Figure 2 shows that lower concentrations of sodium hydroxide, and subsequently sodium ions, favors nanotube formation over the fomation of bulk titania. Their results are again expected, since we know the mechanism for titania nanotube formation requires only a small amount of sodium ions, but this correct conclusion was corroborated by a computer program analyzing literature. This same method can be applied to synthesis conditions for lesser developed materials and discover big trends.

Figure 2. Graph showing the literature trends of what conditions correlate with Titania forming nanotubes.

THE FUTURE OF DATA

These techniques will be applied to the synthesis of more interesting, and less understood, systems. We could use these learning techniques on materials that are currently too expensive to produce at market prices, such as graphene synthesis, and look for generalized trends that have not yet been recognized as a trend. The researchers present a public website with their database and insights at www.synthesisproject.org, which will hopefully grow as more researchers recognize the importance of machine learning techniques to find trends in literature.

Matthew Hautzinger

I love designing and making things. While my interests in science are all over the place, I like chemistry because it allows me to make things on the atomic level. My interests in research are mostly energy related: Solar panels, battery technology, and electronic components. Outside of my work, I'm an avid cyclist, nature enthusiast, and at home chef.

https://chembites.org/2017/11/24/machine-learning-for-understanding-materials-synthesis/

https://figshare.com/s/5ff207b4c094d698ebc0#/articles/4784566

https://github.com/olivettigroup

Sample: https://github.com/olivettigroup/sdata-data-plots/blob/master/SDATA-data-plots.ipynb

Machine Learning for Understanding Materials Synthesis

By Matthew Hautzinger / November 24, 2017

Title: Materials Synthesis Insights from Scientific Literature via Text Extraction and Machine Learning

Authors: Edward Kim, Kevin Huang, Adam Saunders, Andrew McCallum, Gerbrand Ceder, and Elsa Olivetti

Year: 2017

Journal: Chemistry of Materials

The sheer volume of publications makes scientific literature a vast sea of information that cannot be understood by one person. Skimming through papers and reading reviews that summarize multiple reports can help, but how can we make understanding literature more efficient? The authors of this paper present the idea of handling scientific reports the same way data scientists handle big data. They make efforts to create a better method of extracting the information in scientific papers by using machine learning techniques to analyze reported syntheses.

Automate the Mundane (Lit Research)

The authors set out to determine links between synthesis conditions and materials produced. They focus on metal oxides synthesis, which is an important and relatively well understood system. The researchers use application programming interfaces (APIs), basically an automated literature search, to find papers related to metal oxides synthesis. These papers are then “read” with a natural language processing (NLP) script to extract details of synthesis and create a database of synthetic conditions. This database is mined to develop trends and useful correlations. The API search used is through CrossRef which uses keywords related to the desired material (metal oxides in this case). The individual paragraphs within the papers found are then read and represented as mathematical objects (vectors) based on the number of important keywords. A classifier then determines if the paragraph is related to synthesis or not, based on the number of keywords found. These paragraphs are transformed into tree tables (like a multiplication tree) with the root of the tree being the type of synthesis. In the case of oxide synthesis, this is either hydrothermal or calcination reactions. The branches are then made up of experimental conditions and results including temperature, reaction time, number of atoms in the structure, and structural characterization (bulk or nano). The combination of these trees for one data set on metal oxide synthesis is presented in graphical form in Figure 1. This figure represents an amount of data which is close to the number of reaction conditions a single researcher could produce in a career. It incorporates 12913 publications, which is a little over a publication a day for 30 years.

Figure 1. Showing binary metal oxide synthesis can be done with calcination reactions at lower temperatures than pentanary compounds.

We can see highly reliable trends in these plots based on the large number of trials. The trend the authors point out is that the non-binary compounds (compounds multiple types of atoms, like K-Na bismuth titanate) require higher temperatures to form relative to their simpler binary counterparts. While this is an expected and generally accepted conclusion, the fact that a plot generated by computers sifting through literature can show the same correct trends is very cool.

Which Conditions Determine Shape?

The researchers didn’t stop at these simple conditions. They applied their methods to the synthesis of titania nanotubes. Commonly controlled variables such as temperature, annealing time, and sodium hydroxide (NaOH) concentration were analyzed. Figure 2 shows that lower concentrations of sodium hydroxide, and subsequently sodium ions, favors nanotube formation over the fomation of bulk titania. Their results are again expected, since we know the mechanism for titania nanotube formation requires only a small amount of sodium ions, but this correct conclusion was corroborated by a computer program analyzing literature. This same method can be applied to synthesis conditions for lesser developed materials and discover big trends.

Figure 2. Graph showing the literature trends of what conditions correlate with Titania forming nanotubes.

THE FUTURE OF DATA

These techniques will be applied to the synthesis of more interesting, and less understood, systems. We could use these learning techniques on materials that are currently too expensive to produce at market prices, such as graphene synthesis, and look for generalized trends that have not yet been recognized as a trend. The researchers present a public website with their database and insights at www.synthesisproject.org, which will hopefully grow as more researchers recognize the importance of machine learning techniques to find trends in literature.

Matthew Hautzinger

I love designing and making things. While my interests in science are all over the place, I like chemistry because it allows me to make things on the atomic level. My interests in research are mostly energy related: Solar panels, battery technology, and electronic components. Outside of my work, I'm an avid cyclist, nature enthusiast, and at home chef.

https://chembites.org/2017/11/24/machine-learning-for-understanding-materials-synthesis/

https://figshare.com/s/5ff207b4c094d698ebc0#/articles/4784566

https://github.com/olivettigroup

Sample: https://github.com/olivettigroup/sdata-data-plots/blob/master/SDATA-data-plots.ipynb

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Computing power solves molecular mystery

Staff ReporterJul 25, 2018 01:57 PM EDT

Facebook Linkedin Twitter Google+ Print Email

Chemical reactions take place around us all the time - in the air we breathe, the water we drink, and in the factories that make products we use in everyday life. And those reactions happen way faster than you can imagine.

Given optimal conditions, molecules can react with each other in a quadrillionth of a second.

Industry is constantly striving to achieve faster and better chemical processes. Producing hydrogen, which requires splitting water molecules, is one example.

In order to improve the processes we need to know how different molecules react with each other and what triggers the reactions.

Challenging, even with computer simulations

Computer simulations help make it possible to study what happens during a quadrillionth of a second.

So if the sequence of a chemical reaction is known, or if the triggers that initiate the reaction occur frequently, the steps of the reaction can be studied using standard computer simulation techniques.

But this is often not the case in practice. Molecular reactions frequently behave differently. Optimal conditions are often not present - like with water molecules used in hydrogen production - and this makes reactions challenging to investigate, even with computer simulations.

Until recently, we haven't known what initiates the splitting of water molecules. What we do know is that a water molecule has a life span of ten hours before it splits. Ten hours may not sound like a long time, but compared to the molecular time scale - a quadrillionth of a second - it's really long.

This makes it super challenging to figure out the mechanism that causes water molecules to divide. It's like looking for a needle in a huge haystack.

Combining two techniques

NTNU researchers have recently found a way to identify the needle in just such a haystack. In their study, they combined two techniques that had not previously been used together.

Researchers had to study almost 100,000 simulation images of this type before they were able to identify what triggers the water molecules to split. Lots of computing power went into those simulations.

By using their special simulation method, the researchers first managed to simulate exactly how water molecules split.

"We started looking at these ten thousand simulation films and analysing them manually, trying to find the reason why water molecules split," says researcher Anders Lervik at NTNU's Department of Chemistry. He carried out his work with Professor Titus van Erp.

Huge amounts of data

"After spending a lot of time studying these simulation films, we found some interesting relationships, but we also realized that the amount of data was too massive to investigate everything manually.

The researchers used a machine learning method to discover the causes that trigger the reaction. This method has never been used for simulations of this type. Through this analysis, the researchers discovered a small number of variables that describe what initiates the reactions.

What they found provides detailed knowledge of the causative mechanism, as well as ideas for ways to improve the process.

Finding ways for industrial chemical reactions to happen faster and more efficiently has taken a significant step forward with this research. It offers great potential for improving hydrogen production.

(more at link...video: http://www.sciencetimes.com/articles/17847/20180725/computer-solove-molecular-mystery.htm )

Staff ReporterJul 25, 2018 01:57 PM EDT

Facebook Linkedin Twitter Google+ Print Email

Chemical reactions take place around us all the time - in the air we breathe, the water we drink, and in the factories that make products we use in everyday life. And those reactions happen way faster than you can imagine.

Given optimal conditions, molecules can react with each other in a quadrillionth of a second.

Industry is constantly striving to achieve faster and better chemical processes. Producing hydrogen, which requires splitting water molecules, is one example.

In order to improve the processes we need to know how different molecules react with each other and what triggers the reactions.

Challenging, even with computer simulations

Computer simulations help make it possible to study what happens during a quadrillionth of a second.

So if the sequence of a chemical reaction is known, or if the triggers that initiate the reaction occur frequently, the steps of the reaction can be studied using standard computer simulation techniques.

But this is often not the case in practice. Molecular reactions frequently behave differently. Optimal conditions are often not present - like with water molecules used in hydrogen production - and this makes reactions challenging to investigate, even with computer simulations.

Until recently, we haven't known what initiates the splitting of water molecules. What we do know is that a water molecule has a life span of ten hours before it splits. Ten hours may not sound like a long time, but compared to the molecular time scale - a quadrillionth of a second - it's really long.

This makes it super challenging to figure out the mechanism that causes water molecules to divide. It's like looking for a needle in a huge haystack.

Combining two techniques

NTNU researchers have recently found a way to identify the needle in just such a haystack. In their study, they combined two techniques that had not previously been used together.

Researchers had to study almost 100,000 simulation images of this type before they were able to identify what triggers the water molecules to split. Lots of computing power went into those simulations.

By using their special simulation method, the researchers first managed to simulate exactly how water molecules split.

"We started looking at these ten thousand simulation films and analysing them manually, trying to find the reason why water molecules split," says researcher Anders Lervik at NTNU's Department of Chemistry. He carried out his work with Professor Titus van Erp.

Huge amounts of data

"After spending a lot of time studying these simulation films, we found some interesting relationships, but we also realized that the amount of data was too massive to investigate everything manually.

The researchers used a machine learning method to discover the causes that trigger the reaction. This method has never been used for simulations of this type. Through this analysis, the researchers discovered a small number of variables that describe what initiates the reactions.

What they found provides detailed knowledge of the causative mechanism, as well as ideas for ways to improve the process.

Finding ways for industrial chemical reactions to happen faster and more efficiently has taken a significant step forward with this research. It offers great potential for improving hydrogen production.

(more at link...video: http://www.sciencetimes.com/articles/17847/20180725/computer-solove-molecular-mystery.htm )

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Novel machine learning based framework could lead to breakthroughs in material design

by Virginia Tech

10-2018

Computers used to take up entire rooms. Today, a two-pound laptop can slide effortlessly into a backpack. But that wouldn't have been possible without the creation of new, smaller processors—which are only possible with the innovation of new materials.

But how do materials scientists actually invent new materials? Through experimentation, explains Sanket Deshmukh, an assistant professor in the chemical engineering department whose team's recently published computational research might vastly improve the efficiency and costs savings of the material design process.

Deshmukh's lab, the Computational Design of Hybrid Materials lab, is devoted to understanding and simulating the ways molecules move and interact—crucial to creating a new material.

In recent years, machine learning, a powerful subset of artificial intelligence, has been employed by materials scientists to accelerate the discovery of new materials through computer simulations. Deshmukh and his team have recently published research in the Journal of Physical Chemistry Letters demonstrating a novel machine learning framework that trains "on the fly," meaning it instantaneously processes data and learns from it to accelerate the development of computational models.

Traditionally the development of computational models are "carried out manually via trial-and-error approach, which is very expensive and inefficient, and is a labor-intensive task," Deshmukh explained.

"This novel framework not only uses the machine learning in a unique fashion for the first time," Deshmukh said, "but it also dramatically accelerates the development of accurate computational models of materials."

"We train the machine learning model in a 'reverse' fashion by using the properties of a model obtained from molecular dynamics simulations as an input for the machine learning model, and using the input parameters used in molecular dynamics simulations as an output for the machine learning model," said Karteek Bejagam, a post-doctoral researcher in Deshmukh's lab and one of the lead authors of the study.

https://phys.org/news/2018-10-machine-based-framework-breakthroughs-material.html

by Virginia Tech

10-2018

Computers used to take up entire rooms. Today, a two-pound laptop can slide effortlessly into a backpack. But that wouldn't have been possible without the creation of new, smaller processors—which are only possible with the innovation of new materials.

But how do materials scientists actually invent new materials? Through experimentation, explains Sanket Deshmukh, an assistant professor in the chemical engineering department whose team's recently published computational research might vastly improve the efficiency and costs savings of the material design process.

Deshmukh's lab, the Computational Design of Hybrid Materials lab, is devoted to understanding and simulating the ways molecules move and interact—crucial to creating a new material.

In recent years, machine learning, a powerful subset of artificial intelligence, has been employed by materials scientists to accelerate the discovery of new materials through computer simulations. Deshmukh and his team have recently published research in the Journal of Physical Chemistry Letters demonstrating a novel machine learning framework that trains "on the fly," meaning it instantaneously processes data and learns from it to accelerate the development of computational models.

Traditionally the development of computational models are "carried out manually via trial-and-error approach, which is very expensive and inefficient, and is a labor-intensive task," Deshmukh explained.

"This novel framework not only uses the machine learning in a unique fashion for the first time," Deshmukh said, "but it also dramatically accelerates the development of accurate computational models of materials."

"We train the machine learning model in a 'reverse' fashion by using the properties of a model obtained from molecular dynamics simulations as an input for the machine learning model, and using the input parameters used in molecular dynamics simulations as an output for the machine learning model," said Karteek Bejagam, a post-doctoral researcher in Deshmukh's lab and one of the lead authors of the study.

https://phys.org/news/2018-10-machine-based-framework-breakthroughs-material.html

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

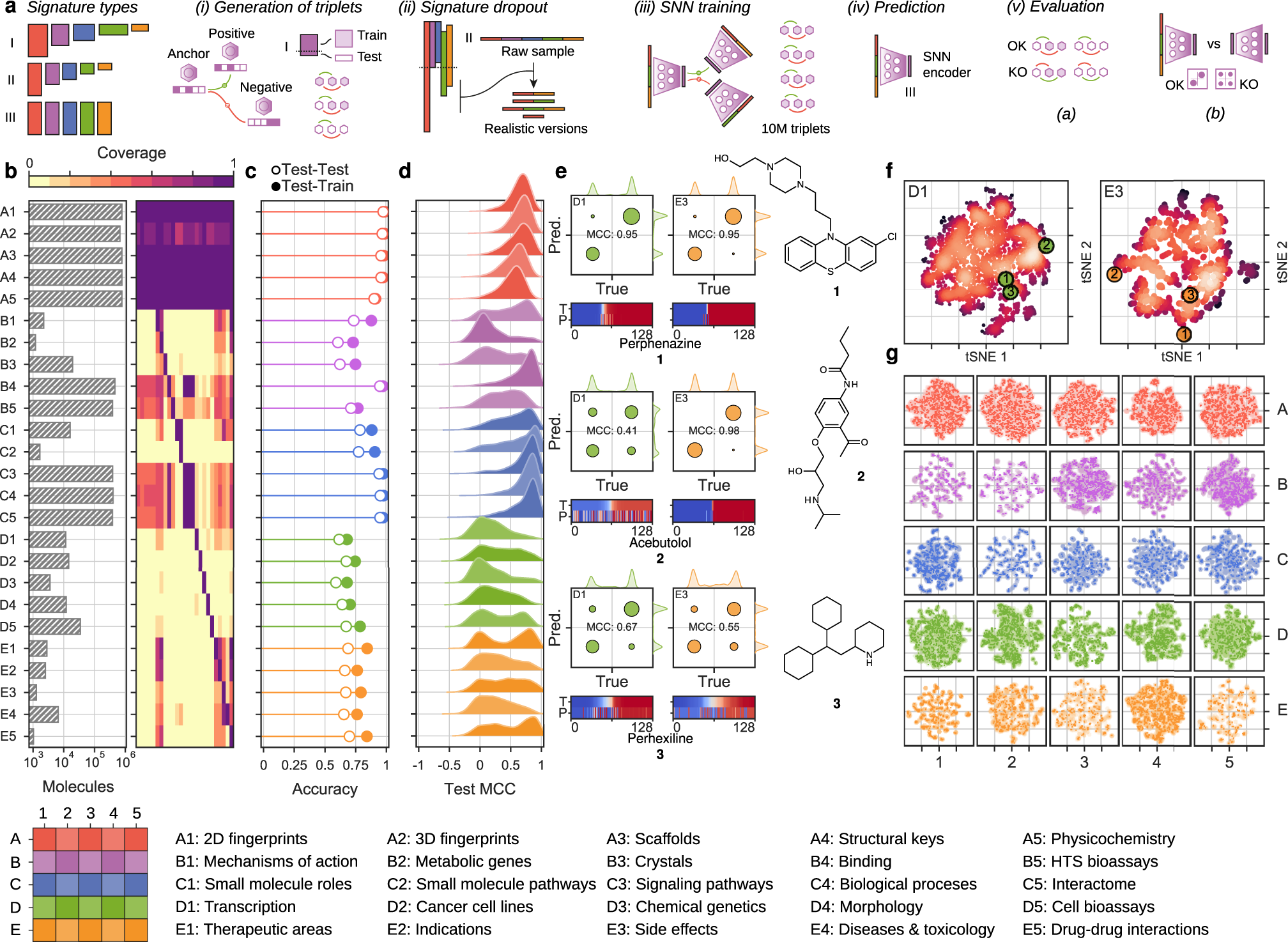

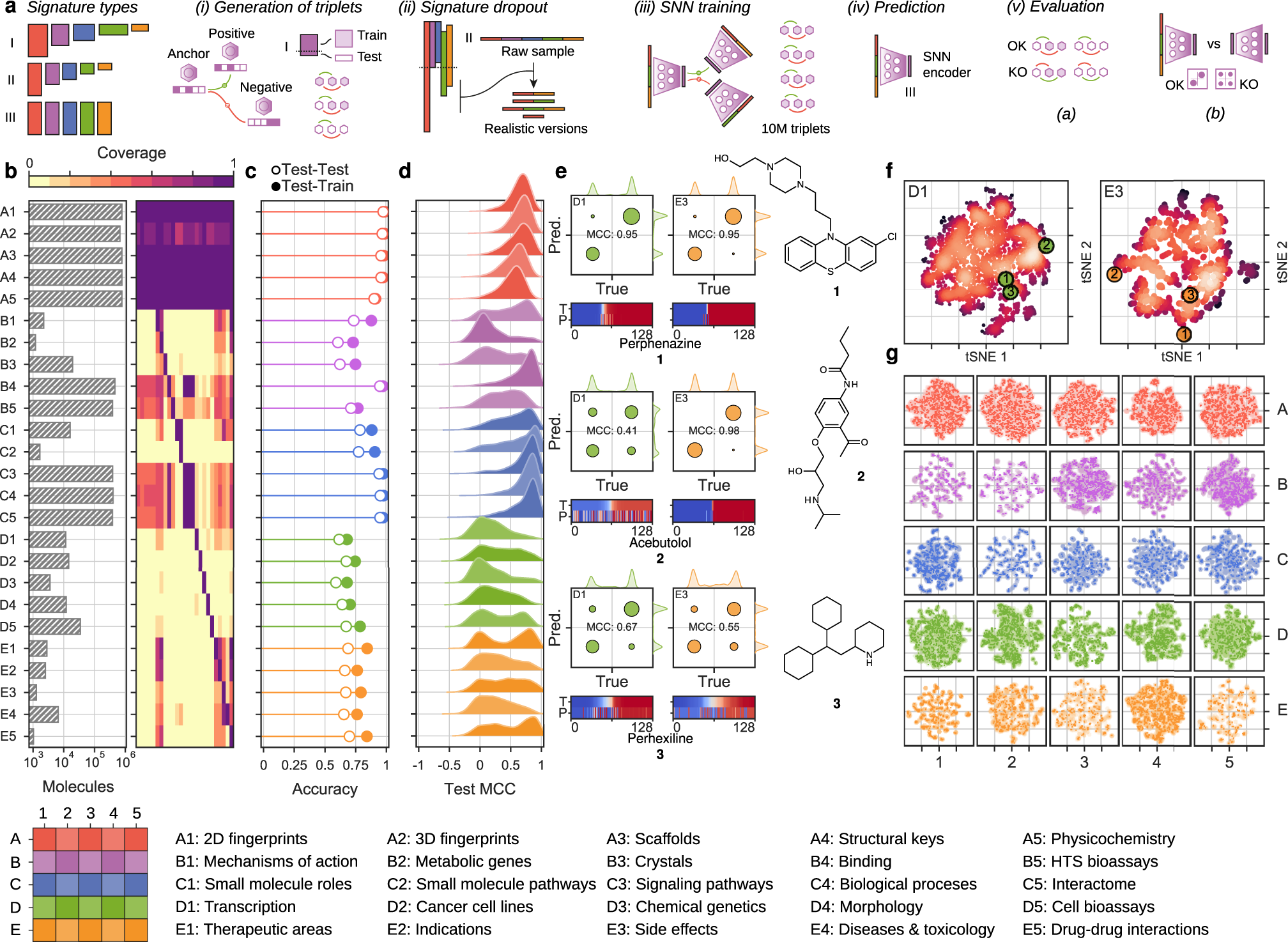

Chemical Checker for drug-molecule interactons based on CNNs.

‐-----

Bioactivity descriptors for uncharacterized chemical compounds

Martino Bertoni, Miquel Duran-Frigola, […]Patrick Aloy

Nature Communications volume 12, Article number: 3932 (2021)

Abstract

Chemical descriptors encode the physicochemical and structural properties of small molecules, and they are at the core of chemoinformatics. The broad release of bioactivity data has prompted enriched representations of compounds, reaching beyond chemical structures and capturing their known biological properties. Unfortunately, bioactivity descriptors are not available for most small molecules, which limits their applicability to a few thousand well characterized compounds. Here we present a collection of deep neural networks able to infer bioactivity signatures for any compound of interest, even when little or no experimental information is available for them. Our signaturizers relate to bioactivities of 25 different types (including target profiles, cellular response and clinical outcomes) and can be used as drop-in replacements for chemical descriptors in day-to-day chemoinformatics tasks. Indeed, we illustrate how inferred bioactivity signatures are useful to navigate the chemical space in a biologically relevant manner, unveiling higher-order organization in natural product collections, and to enrich mostly uncharacterized chemical libraries for activity against the drug-orphan target Snail1. Moreover, we implement a battery of signature-activity relationship (SigAR) models and show a substantial improvement in performance, with respect to chemistry-based classifiers, across a series of biophysics and physiology activity prediction benchmarks.

Download PDF

Introduction

Most of the chemical space remains uncharted and identifying its regions of biological relevance is key to medicinal chemistry and chemical biology1,2. To explore and catalog this vast space, scientists have invented a variety of chemical descriptors, which encode physicochemical and structural properties of small molecules. Molecular fingerprints are a widespread form of descriptors consisting of binary (1/0) vectors describing the presence or absence of certain molecular substructures. These encodings are at the core of chemoinformatics and are fundamental in compound similarity searches and clustering, and are applied to computational drug discovery (CDD), structure optimization, and target prediction.

The corpus of bioactivity records available suggests that other numerical representations of molecules are possible, reaching beyond chemical structures and capturing their known biological properties. Indeed, it has been shown that an enriched representation of molecules can be achieved through the use of bioactivity signatures3. Bioactivity signatures are multi-dimensional vectors that capture the biological traits of the molecule in a format that is akin to the structural descriptors or fingerprints used in the field of chemoinformatics. The first attempts to develop biological descriptors for chemical compounds encapsulated ligand-binding affinities4, and fingerprints describing the target profile of small molecules unveiled many unanticipated and physiologically relevant associations5. Currently, public databases contain experimentally determined bioactivity data for about a million molecules, which represent only a small percentage of commercially available compounds6 and a negligible fraction of the synthetically accessible chemical space7. In practical terms, this means bioactivity signatures cannot be derived for most compounds, and CDD methods are limited to using chemical information alone as a primary input, thereby hindering their performance and not fully exploiting the bioactivity knowledge produced over the years by the scientific community.

Recently, we integrated the major chemogenomics and drug databases in a single resource named the Chemical Checker (CC), which is the largest collection of small-molecule bioactivity signatures available to date8. In the CC, bioactivity signatures are organized by data type (ligand-receptor binding, cell sensitivity profiles, toxicology, etc.), following a chemistry-to-clinics rationale that facilitates the selection of relevant signature classes at each step of the drug discovery pipeline. In essence, the CC is an alternative representation of the small-molecule knowledge deposited in the public domain and, as such, it is also limited by the availability of experimental data and the coverage of its source databases (e.g., ChEMBL9 or DrugBank10). Thus, the CC is most useful when a substantial amount of bioactivity information is available for the molecules and remains of limited value for poorly characterized compounds11. In the current study, we present a methodology to infer CC bioactivity signatures for any compound of interest, based on the observation that the different bioactivity spaces are not completely independent, and thus similarities of a given bioactivity type (e.g., targets) can be transferred to other data kinds (e.g., therapeutic indications). Overall, we make bioactivity signatures available for any given compound, assigning confidence to our predictions and illustrating how they can be used to navigate the chemical space in an efficient, biologically relevant manner. Moreover, we explore their added value in the identification of hit compounds against the drug-orphan target Snail1 in a mostly uncharacterized compound library, and through the implementation of a battery of signature–activity relationship (SigAR) models to predict biophysical and physiological properties of molecules.

https://www.nature.com/articles/s41467-021-24150-4#Fig1

‐-----

Bioactivity descriptors for uncharacterized chemical compounds

Martino Bertoni, Miquel Duran-Frigola, […]Patrick Aloy

Nature Communications volume 12, Article number: 3932 (2021)

Abstract

Chemical descriptors encode the physicochemical and structural properties of small molecules, and they are at the core of chemoinformatics. The broad release of bioactivity data has prompted enriched representations of compounds, reaching beyond chemical structures and capturing their known biological properties. Unfortunately, bioactivity descriptors are not available for most small molecules, which limits their applicability to a few thousand well characterized compounds. Here we present a collection of deep neural networks able to infer bioactivity signatures for any compound of interest, even when little or no experimental information is available for them. Our signaturizers relate to bioactivities of 25 different types (including target profiles, cellular response and clinical outcomes) and can be used as drop-in replacements for chemical descriptors in day-to-day chemoinformatics tasks. Indeed, we illustrate how inferred bioactivity signatures are useful to navigate the chemical space in a biologically relevant manner, unveiling higher-order organization in natural product collections, and to enrich mostly uncharacterized chemical libraries for activity against the drug-orphan target Snail1. Moreover, we implement a battery of signature-activity relationship (SigAR) models and show a substantial improvement in performance, with respect to chemistry-based classifiers, across a series of biophysics and physiology activity prediction benchmarks.

Download PDF

Introduction

Most of the chemical space remains uncharted and identifying its regions of biological relevance is key to medicinal chemistry and chemical biology1,2. To explore and catalog this vast space, scientists have invented a variety of chemical descriptors, which encode physicochemical and structural properties of small molecules. Molecular fingerprints are a widespread form of descriptors consisting of binary (1/0) vectors describing the presence or absence of certain molecular substructures. These encodings are at the core of chemoinformatics and are fundamental in compound similarity searches and clustering, and are applied to computational drug discovery (CDD), structure optimization, and target prediction.

The corpus of bioactivity records available suggests that other numerical representations of molecules are possible, reaching beyond chemical structures and capturing their known biological properties. Indeed, it has been shown that an enriched representation of molecules can be achieved through the use of bioactivity signatures3. Bioactivity signatures are multi-dimensional vectors that capture the biological traits of the molecule in a format that is akin to the structural descriptors or fingerprints used in the field of chemoinformatics. The first attempts to develop biological descriptors for chemical compounds encapsulated ligand-binding affinities4, and fingerprints describing the target profile of small molecules unveiled many unanticipated and physiologically relevant associations5. Currently, public databases contain experimentally determined bioactivity data for about a million molecules, which represent only a small percentage of commercially available compounds6 and a negligible fraction of the synthetically accessible chemical space7. In practical terms, this means bioactivity signatures cannot be derived for most compounds, and CDD methods are limited to using chemical information alone as a primary input, thereby hindering their performance and not fully exploiting the bioactivity knowledge produced over the years by the scientific community.

Recently, we integrated the major chemogenomics and drug databases in a single resource named the Chemical Checker (CC), which is the largest collection of small-molecule bioactivity signatures available to date8. In the CC, bioactivity signatures are organized by data type (ligand-receptor binding, cell sensitivity profiles, toxicology, etc.), following a chemistry-to-clinics rationale that facilitates the selection of relevant signature classes at each step of the drug discovery pipeline. In essence, the CC is an alternative representation of the small-molecule knowledge deposited in the public domain and, as such, it is also limited by the availability of experimental data and the coverage of its source databases (e.g., ChEMBL9 or DrugBank10). Thus, the CC is most useful when a substantial amount of bioactivity information is available for the molecules and remains of limited value for poorly characterized compounds11. In the current study, we present a methodology to infer CC bioactivity signatures for any compound of interest, based on the observation that the different bioactivity spaces are not completely independent, and thus similarities of a given bioactivity type (e.g., targets) can be transferred to other data kinds (e.g., therapeutic indications). Overall, we make bioactivity signatures available for any given compound, assigning confidence to our predictions and illustrating how they can be used to navigate the chemical space in an efficient, biologically relevant manner. Moreover, we explore their added value in the identification of hit compounds against the drug-orphan target Snail1 in a mostly uncharacterized compound library, and through the implementation of a battery of signature–activity relationship (SigAR) models to predict biophysical and physiological properties of molecules.

https://www.nature.com/articles/s41467-021-24150-4#Fig1

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Hi LTAM and Team,

Came across this ML ready site. It can be queried remotely and might be useful to test with a notebook.

http://bindingdb.org/jsp/dbsearch/PrimarySearch_ki.jsp?target=Activin+receptor-like+kinase+2+%28ALK-2%29%28aa+172-499%29&tag=tg&kiunit=nM&icunit=nM&column=ki&submit=Search&energyterm=kJ%2Fmole

Came across this ML ready site. It can be queried remotely and might be useful to test with a notebook.

http://bindingdb.org/jsp/dbsearch/PrimarySearch_ki.jsp?target=Activin+receptor-like+kinase+2+%28ALK-2%29%28aa+172-499%29&tag=tg&kiunit=nM&icunit=nM&column=ki&submit=Search&energyterm=kJ%2Fmole

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

The commercial apps are from Chemaxon out of Budapest, Hungary:

https://chemaxon.com/products/chemlocator

https://chemaxon.com/products/chemlocator

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Pretty good article on Dimensionality Reduction. I think ML with most of classical physics has this over-riding problem in ML-AI with molecular bonds. Miles' simplified theories greatly reduces this:

---------

https://neptune.ai/blog/dimensionality-reduction

MLOps Blog

Dimensionality Reduction for Machine Learning

Nilesh Barla

19th April, 2023

ML Model Development

Data forms the foundation of any machine learning algorithm, without it, Data Science can not happen. Sometimes, it can contain a huge number of features, some of which are not even required. Such redundant information makes modeling complicated. Furthermore, interpreting and understanding the data by visualization gets difficult because of the high dimensionality. This is where dimensionality reduction comes into play.

In this article you will learn:

What is dimensionality reduction?

What is the curse of dimensionality?

Tools and libraries used for dimensionality reduction

Algorithms used for dimensionality reduction

Applications

Advantages and disadvantages

What is dimensionality reduction?

Dimensionality reduction is the task of reducing the number of features in a dataset. In machine learning tasks like regression or classification, there are often too many variables to work with. These variables are also called features. The higher the number of features, the more difficult it is to model them, this is known as the curse of dimensionality. This will be discussed in detail in the next section.

Additionally, some of these features can be quite redundant, adding noise to the dataset and it makes no sense to have them in the training data. This is where feature space needs to be reduced.

The process of dimensionality reduction essentially transforms data from high-dimensional feature space to a low-dimensional feature space. Simultaneously, it is also important that meaningful properties present in the data are not lost during the transformation.

Dimensionality reduction is commonly used in data visualization to understand and interpret the data, and in machine learning or deep learning techniques to simplify the task at hand.

Curse of dimensionality

It is well known that ML/DL algorithms need a large amount of data to learn invariance, patterns, and representations. If this data comprises a large number of features, this can lead to the curse of dimensionality. The curse of dimensionality, first introduced by Bellman, describes that in order to estimate an arbitrary function with a certain accuracy the number of features or dimensionality required for estimation grows exponentially. This is especially true with big data which yields more sparsity.

Sparsity in data is usually referred to as the features having a value of zero; this doesn’t mean that the value is missing. If the data has a lot of sparse features then the space and computational complexity increase. Oliver Kuss [2002] shows that the model trained on sparse data performed poorly in the test dataset. In other words, the model during the training learns noise and they are not able to generalize well. Hence they overfit.

When the data is sparse, observations or samples in the training dataset are difficult to cluster as high-dimensional data causes every observation in the dataset to appear equidistant from each other. If data is meaningful and non-redundant, then there will be regions where similar data points come together and cluster, furthermore they must be statistically significant.

Issues that arise with high dimensional data are:

Running a risk of overfitting the machine learning model.

Difficulty in clustering similar features.

Increased space and computational time complexity.

Non-sparse data or dense data on the other hand is data that has non-zero features. Apart from containing non-zero features they also contain information that is both meaningful and non-redundant.

To tackle the curse of dimensionality, methods like dimensionality reduction are used. Dimensional reduction techniques are very useful to transform sparse features to dense features. Furthermore, dimensionality reduction is also used to clean the data and feature extraction.

Tools and library

The most popular library for dimensionality reduction is scikit-learn (sklearn). The library consists of three main modules for dimensionality reduction algorithms:

Decomposition algorithms

Principal Component Analysis

Kernel Principal Component Analysis

Non-Negative Matrix Factorization

Singular Value Decomposition

Manifold learning algorithms

t-Distributed Stochastic Neighbor Embedding

Spectral Embedding

Locally Linear Embedding

Discriminant Analysis

Linear Discriminant Analysis

When it comes to deep learning, algorithms like autoencoders can be constructed to reduce dimensions and learn features and representations. Frameworks like Pytorch, Pytorch Lightning, Keras, and TensorFlow are used to create autoencoders.

Recommended for you

How to Keep Track of Scikit-Learn Model Training Metadata

Knowledge Distillation: Principles, Algorithms, Applications

The Best ML Frameworks & Extensions For Scikit-learn

Algorithms for dimensionality reduction

Let’s start with the first class of algorithms.

Decomposition algorithms

Decomposition algorithm in scikit-learn involves dimensionality reduction algorithms. We can call various techniques using the following command:

from sklearn.decomposition import PCA, KernelPCA, NMF

Principal Component Analysis (PCA)

Principal Component Analysis, or PCA, is a dimensionality-reduction method to find lower-dimensional space by preserving the variance as measured in the high dimensional input space. It is an unsupervised method for dimensionality reduction.

PCA transformations are linear transformations. It involves the process of finding the principal components, which is the decomposition of the feature matrix into eigenvectors. This means that PCA will not be effective when the distribution of the dataset is non-linear.

Let’s understand PCA with python code.

def pca(X=np.array([]), no_dims=50):

print("Preprocessing the data using PCA...")

(n, d) = X.shape

Mean = np.tile(np.mean(X, 0), (n, 1))

X = X - Mean

(l, M) = np.linalg.eig(np.dot(X.T, X))

Y = np.dot(X, M[:, 0:no_dims])

return Y

PCA implementation is quite straightforward. We can define the whole process into just four steps:

Standardization: The data has to be transformed to a common scale by taking the difference between the original dataset with the mean of the whole dataset. This will make the distribution 0 centered.

Finding covariance: Covariance will help us to understand the relationship between the mean and original data.

Determining the principal components: Principal components can be determined by calculating the eigenvectors and eigenvalues. Eigenvectors are a special set of vectors that help us to understand the structure and the property of the data that would be principal components. The eigenvalues on the other hand help us to determine the principal components. The highest eigenvalues and their corresponding eigenvectors make the most important principal components.

Final output: It is the dot product of the standardized matrix and the eigenvector. Note that the number of columns or features will be changed.

Reducing the number of variables of data not only reduces complexity but also decreases the accuracy of the machine learning model. However, with a smaller number of features it is easy to explore, visualize and analyze, it also makes machine learning algorithms computationally less expensive. In simple words, the idea of PCA is to reduce the number of variables of a data set, while preserving as much information as possible.

Let’s also take a look at the modules and functions sklearn provides for PCA.

We can start by loading the most dataset:

from sklearn.datasets import load_digits

digits = load_digits()

digits.data.shape

(1797, 64)

The data consists of 8×8 pixel images, which means that they are 64-dimensional. To gain some understanding of the relationships between these points, we can use PCA to project them to lower dimensions, like 2-D:

from sklearn.decomposition import PCA

pca = PCA(2) # project from 64 to 2 dimensions

projected = pca.fit_transform(digits.data)

print(digits.data.shape)

print(projected.shape)

(1797, 64)

(1797, 2)

Now, let’s plot the first two principal components.

plt.scatter(projected[:, 0], projected[:, 1],

c=digits.target, edgecolor='none', alpha=0.5,

cmap=plt.cm.get_cmap('spectral', 10))

plt.xlabel('component 1')

plt.ylabel('component 2')

plt.colorbar();

Principal Component Analysis (PCA)

Dimensionality reduction technique: PCA | Source: Author

We can see that PCA optimally found the principal components that can quite effectively cluster similar distributions, for the most part.

Spectral embedding

Spectral Embedding is another non-linear dimensionality reduction technique that also happens to be an unsupervised machine learning algorithm. Spectral embedding aims to find clusters of different classes based on the low-dimensional representations.

We can again break the whole process into three simple steps:

Preprocessing: Construct a Laplacian matrix representation of the data or graph.

Decomposition: Compute eigenvalues and eigenvectors of the constructed matrix and then map each point to a lower-dimensional representation. Spectral embedding makes use of the second smallest eigenvalue and its corresponding eigenvector.

Clustering: Assign points to two or more clusters, based on the representation. Clustering is usually done using k-means clustering.

Applications: Spectral Embedding finds its application in image segmentation.

Discriminant Analysis

Discriminant Analysis is another module that scikit-learn provides. It can be called using the following command:

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

Linear Discriminant Analysis (LDA)

LDA is an algorithm that is used to find a linear combination of features in a dataset. Like PCA, LDA is also a linear transformation-based technique. But unlike PCA it is a supervised learning algorithm.

LDA computes the directions, i.e. linear discriminants that can create decision boundaries and maximize the separation between multiple classes. It is also very effective for multi-class classification tasks.

To have a more intuitive understanding of LDA, consider plotting a relationship of two classes as shown in the image below.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

One way to solve this problem is to project all the data points in the x-axis.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

This approach will lead to information loss and would be redundant.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

A better approach will be to compute the distance between all the points in the data and fit a new linear line that passes through them. This new line can now be used to project all the points.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

This new line minimizes the variance and classifies the two classes efficiently by maximizing the distance between them.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

LDA can be used for multivariate data as well. It makes data inference quite simple. LDA can be computed using the following 5 steps:

Compute the d-dimensional mean vectors for the different classes from the dataset.

Compute the scatter matrices (in-between-class and within-class scatter matrices). the Scatter matrix is used to make estimates of the covariance matrix. This is done when the covariance matrix is difficult to calculate or joint variability of two random variables is difficult to calculate.

Compute the eigenvectors (e1, e2, e3…ed) and corresponding eigenvalues (λ1,λ2,…,λd) for the scatter matrices.

Sort the eigenvectors by decreasing eigenvalues and choose k eigenvectors with the largest eigenvalues to form a d×k dimensional matrix W (where every column represents an eigenvector).

Use this d×k eigenvector matrix to transform the samples onto the new subspace. This can be summarized by the matrix multiplication: Y=X×W (where X is an n×d dimensional matrix representing the n samples, and y are the transformed n×k-dimensional samples in the new subspace).

To know about LDA you can check out this article.

Applications of dimentionality reduction

Dimensionality reduction finds its way in many real-life applications some of which are:

Customer relationship management

Text categorization

Image retrieval

Intrusion detection

Medical image segmentation

Advantages and disadvantages of dimentionality reduction

Advantages of dimensionality reduction:

It helps in data compression by reducing features.

It reduces storage.

It makes machine learning algorithms computationally efficient.

It also helps remove redundant features and noise.

It tackles the curse of dimensionality

Disadvantages of dimensionality reduction:

It may lead to some amount of data loss.

Accuracy is compromised.

Final thoughts

In this article, we learned about dimensionality reduction and also about the curse of dimensionality. We touched on the different algorithms that are used in dimensionality reduction with mathematical details and through code as well.

It is worth mentioning these algorithms are supposed to be used based on the task at hand. For instance, if the nature of your data is linear then use decomposition methods otherwise use manifold learning techniques.

It is considered to be a good practice to first visualize the data and then decide which method to use. Also, do not restrict yourself to one method but explore differently and see which one is the most suitable.

I hope you have learned something from this article. Happy learning.

https://machinelearningmastery.com/dimensionality-reduction-for-machine-learning/

https://www.sciencedirect.com/science/article/pii/S1877050920300879

https://doi.org/10.1016/j.procs.2020.01.079

https://neptune.ai/blog/dimensionality-reduction

https://machinelearningmastery.com/principal-components-analysis-for-dimensionality-reduction-in-python/

---------

https://neptune.ai/blog/dimensionality-reduction

MLOps Blog

Dimensionality Reduction for Machine Learning

Nilesh Barla

19th April, 2023

ML Model Development

Data forms the foundation of any machine learning algorithm, without it, Data Science can not happen. Sometimes, it can contain a huge number of features, some of which are not even required. Such redundant information makes modeling complicated. Furthermore, interpreting and understanding the data by visualization gets difficult because of the high dimensionality. This is where dimensionality reduction comes into play.

In this article you will learn:

What is dimensionality reduction?

What is the curse of dimensionality?

Tools and libraries used for dimensionality reduction

Algorithms used for dimensionality reduction

Applications

Advantages and disadvantages

What is dimensionality reduction?

Dimensionality reduction is the task of reducing the number of features in a dataset. In machine learning tasks like regression or classification, there are often too many variables to work with. These variables are also called features. The higher the number of features, the more difficult it is to model them, this is known as the curse of dimensionality. This will be discussed in detail in the next section.

Additionally, some of these features can be quite redundant, adding noise to the dataset and it makes no sense to have them in the training data. This is where feature space needs to be reduced.

The process of dimensionality reduction essentially transforms data from high-dimensional feature space to a low-dimensional feature space. Simultaneously, it is also important that meaningful properties present in the data are not lost during the transformation.

Dimensionality reduction is commonly used in data visualization to understand and interpret the data, and in machine learning or deep learning techniques to simplify the task at hand.

Curse of dimensionality

It is well known that ML/DL algorithms need a large amount of data to learn invariance, patterns, and representations. If this data comprises a large number of features, this can lead to the curse of dimensionality. The curse of dimensionality, first introduced by Bellman, describes that in order to estimate an arbitrary function with a certain accuracy the number of features or dimensionality required for estimation grows exponentially. This is especially true with big data which yields more sparsity.

Sparsity in data is usually referred to as the features having a value of zero; this doesn’t mean that the value is missing. If the data has a lot of sparse features then the space and computational complexity increase. Oliver Kuss [2002] shows that the model trained on sparse data performed poorly in the test dataset. In other words, the model during the training learns noise and they are not able to generalize well. Hence they overfit.

When the data is sparse, observations or samples in the training dataset are difficult to cluster as high-dimensional data causes every observation in the dataset to appear equidistant from each other. If data is meaningful and non-redundant, then there will be regions where similar data points come together and cluster, furthermore they must be statistically significant.

Issues that arise with high dimensional data are:

Running a risk of overfitting the machine learning model.

Difficulty in clustering similar features.

Increased space and computational time complexity.

Non-sparse data or dense data on the other hand is data that has non-zero features. Apart from containing non-zero features they also contain information that is both meaningful and non-redundant.

To tackle the curse of dimensionality, methods like dimensionality reduction are used. Dimensional reduction techniques are very useful to transform sparse features to dense features. Furthermore, dimensionality reduction is also used to clean the data and feature extraction.

Tools and library

The most popular library for dimensionality reduction is scikit-learn (sklearn). The library consists of three main modules for dimensionality reduction algorithms:

Decomposition algorithms

Principal Component Analysis

Kernel Principal Component Analysis

Non-Negative Matrix Factorization

Singular Value Decomposition

Manifold learning algorithms

t-Distributed Stochastic Neighbor Embedding

Spectral Embedding

Locally Linear Embedding

Discriminant Analysis

Linear Discriminant Analysis

When it comes to deep learning, algorithms like autoencoders can be constructed to reduce dimensions and learn features and representations. Frameworks like Pytorch, Pytorch Lightning, Keras, and TensorFlow are used to create autoencoders.

Recommended for you

How to Keep Track of Scikit-Learn Model Training Metadata

Knowledge Distillation: Principles, Algorithms, Applications

The Best ML Frameworks & Extensions For Scikit-learn

Algorithms for dimensionality reduction

Let’s start with the first class of algorithms.

Decomposition algorithms

Decomposition algorithm in scikit-learn involves dimensionality reduction algorithms. We can call various techniques using the following command:

from sklearn.decomposition import PCA, KernelPCA, NMF

Principal Component Analysis (PCA)

Principal Component Analysis, or PCA, is a dimensionality-reduction method to find lower-dimensional space by preserving the variance as measured in the high dimensional input space. It is an unsupervised method for dimensionality reduction.

PCA transformations are linear transformations. It involves the process of finding the principal components, which is the decomposition of the feature matrix into eigenvectors. This means that PCA will not be effective when the distribution of the dataset is non-linear.

Let’s understand PCA with python code.

def pca(X=np.array([]), no_dims=50):

print("Preprocessing the data using PCA...")

(n, d) = X.shape

Mean = np.tile(np.mean(X, 0), (n, 1))

X = X - Mean

(l, M) = np.linalg.eig(np.dot(X.T, X))

Y = np.dot(X, M[:, 0:no_dims])

return Y

PCA implementation is quite straightforward. We can define the whole process into just four steps:

Standardization: The data has to be transformed to a common scale by taking the difference between the original dataset with the mean of the whole dataset. This will make the distribution 0 centered.

Finding covariance: Covariance will help us to understand the relationship between the mean and original data.

Determining the principal components: Principal components can be determined by calculating the eigenvectors and eigenvalues. Eigenvectors are a special set of vectors that help us to understand the structure and the property of the data that would be principal components. The eigenvalues on the other hand help us to determine the principal components. The highest eigenvalues and their corresponding eigenvectors make the most important principal components.

Final output: It is the dot product of the standardized matrix and the eigenvector. Note that the number of columns or features will be changed.

Reducing the number of variables of data not only reduces complexity but also decreases the accuracy of the machine learning model. However, with a smaller number of features it is easy to explore, visualize and analyze, it also makes machine learning algorithms computationally less expensive. In simple words, the idea of PCA is to reduce the number of variables of a data set, while preserving as much information as possible.

Let’s also take a look at the modules and functions sklearn provides for PCA.

We can start by loading the most dataset:

from sklearn.datasets import load_digits

digits = load_digits()

digits.data.shape

(1797, 64)

The data consists of 8×8 pixel images, which means that they are 64-dimensional. To gain some understanding of the relationships between these points, we can use PCA to project them to lower dimensions, like 2-D:

from sklearn.decomposition import PCA

pca = PCA(2) # project from 64 to 2 dimensions

projected = pca.fit_transform(digits.data)

print(digits.data.shape)

print(projected.shape)

(1797, 64)

(1797, 2)

Now, let’s plot the first two principal components.

plt.scatter(projected[:, 0], projected[:, 1],

c=digits.target, edgecolor='none', alpha=0.5,

cmap=plt.cm.get_cmap('spectral', 10))

plt.xlabel('component 1')

plt.ylabel('component 2')

plt.colorbar();

Principal Component Analysis (PCA)

Dimensionality reduction technique: PCA | Source: Author

We can see that PCA optimally found the principal components that can quite effectively cluster similar distributions, for the most part.

Spectral embedding

Spectral Embedding is another non-linear dimensionality reduction technique that also happens to be an unsupervised machine learning algorithm. Spectral embedding aims to find clusters of different classes based on the low-dimensional representations.

We can again break the whole process into three simple steps:

Preprocessing: Construct a Laplacian matrix representation of the data or graph.

Decomposition: Compute eigenvalues and eigenvectors of the constructed matrix and then map each point to a lower-dimensional representation. Spectral embedding makes use of the second smallest eigenvalue and its corresponding eigenvector.

Clustering: Assign points to two or more clusters, based on the representation. Clustering is usually done using k-means clustering.

Applications: Spectral Embedding finds its application in image segmentation.

Discriminant Analysis

Discriminant Analysis is another module that scikit-learn provides. It can be called using the following command:

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

Linear Discriminant Analysis (LDA)

LDA is an algorithm that is used to find a linear combination of features in a dataset. Like PCA, LDA is also a linear transformation-based technique. But unlike PCA it is a supervised learning algorithm.

LDA computes the directions, i.e. linear discriminants that can create decision boundaries and maximize the separation between multiple classes. It is also very effective for multi-class classification tasks.

To have a more intuitive understanding of LDA, consider plotting a relationship of two classes as shown in the image below.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

One way to solve this problem is to project all the data points in the x-axis.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

This approach will lead to information loss and would be redundant.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

A better approach will be to compute the distance between all the points in the data and fit a new linear line that passes through them. This new line can now be used to project all the points.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

This new line minimizes the variance and classifies the two classes efficiently by maximizing the distance between them.

Linear Discriminant Analysis (LDA)

Dimensionality reduction technique: LDA | Source: Towards Data Science

LDA can be used for multivariate data as well. It makes data inference quite simple. LDA can be computed using the following 5 steps:

Compute the d-dimensional mean vectors for the different classes from the dataset.

Compute the scatter matrices (in-between-class and within-class scatter matrices). the Scatter matrix is used to make estimates of the covariance matrix. This is done when the covariance matrix is difficult to calculate or joint variability of two random variables is difficult to calculate.

Compute the eigenvectors (e1, e2, e3…ed) and corresponding eigenvalues (λ1,λ2,…,λd) for the scatter matrices.

Sort the eigenvectors by decreasing eigenvalues and choose k eigenvectors with the largest eigenvalues to form a d×k dimensional matrix W (where every column represents an eigenvector).

Use this d×k eigenvector matrix to transform the samples onto the new subspace. This can be summarized by the matrix multiplication: Y=X×W (where X is an n×d dimensional matrix representing the n samples, and y are the transformed n×k-dimensional samples in the new subspace).

To know about LDA you can check out this article.

Applications of dimentionality reduction

Dimensionality reduction finds its way in many real-life applications some of which are:

Customer relationship management

Text categorization

Image retrieval

Intrusion detection

Medical image segmentation

Advantages and disadvantages of dimentionality reduction

Advantages of dimensionality reduction:

It helps in data compression by reducing features.

It reduces storage.

It makes machine learning algorithms computationally efficient.

It also helps remove redundant features and noise.

It tackles the curse of dimensionality

Disadvantages of dimensionality reduction:

It may lead to some amount of data loss.

Accuracy is compromised.

Final thoughts

In this article, we learned about dimensionality reduction and also about the curse of dimensionality. We touched on the different algorithms that are used in dimensionality reduction with mathematical details and through code as well.

It is worth mentioning these algorithms are supposed to be used based on the task at hand. For instance, if the nature of your data is linear then use decomposition methods otherwise use manifold learning techniques.

It is considered to be a good practice to first visualize the data and then decide which method to use. Also, do not restrict yourself to one method but explore differently and see which one is the most suitable.

I hope you have learned something from this article. Happy learning.

https://machinelearningmastery.com/dimensionality-reduction-for-machine-learning/

https://www.sciencedirect.com/science/article/pii/S1877050920300879

https://doi.org/10.1016/j.procs.2020.01.079

https://neptune.ai/blog/dimensionality-reduction

https://machinelearningmastery.com/principal-components-analysis-for-dimensionality-reduction-in-python/

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

I asked my Bing AI to summarize your post above (I asked for a long summary blog-style). Here's its summary; is it decent?

In this blog post, I will summarize a forum thread from https://milesmathis.forumotion.com/t519-machine-learning-for-understanding-materials-synthesis#7218. The thread is about a paper that uses machine learning to understand how materials are synthesized.

The paper, titled "Machine learning for understanding materials synthesis", was published in Nature Communications in 2020 by a team of researchers from Stanford University and Lawrence Berkeley National Laboratory. The paper proposes a novel approach to extract and analyze information from scientific papers that describe how materials are made.

The paper claims that there is a gap between the vast amount of literature on materials synthesis and the limited availability of structured and searchable data on synthesis methods and conditions. The paper aims to bridge this gap by using natural language processing (NLP) and machine learning (ML) techniques to automatically extract and classify synthesis information from text.

The paper presents a framework that consists of three main steps: (1) data collection and preprocessing, (2) information extraction and representation, and (3) information analysis and visualization. The paper describes each step in detail and demonstrates the results on a dataset of 3,000 papers on metal-organic frameworks (MOFs), a class of porous materials with applications in gas storage, separation, catalysis, and sensing.

The paper shows that the framework can successfully identify and extract synthesis information from text, such as reactants, solvents, temperature, pressure, time, and yield. The paper also shows that the framework can represent the extracted information in a structured and standardized format, such as tables and graphs. The paper further shows that the framework can analyze and visualize the extracted information to reveal patterns and trends in synthesis methods and conditions, such as correlations between synthesis parameters and material properties.

The paper concludes that the framework can provide a valuable tool for understanding materials synthesis and accelerating materials discovery. The paper also discusses some limitations and challenges of the framework, such as the quality and completeness of the data, the complexity and diversity of synthesis processes, and the generalizability and scalability of the approach. The paper suggests some possible directions for future work, such as improving the data quality and coverage, incorporating domain knowledge and expert feedback, and extending the framework to other types of materials and synthesis information.

Lloyd- Posts : 165

Join date : 2022-04-12

Chromium6 likes this post

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

There have been a lot of recent announcements on LLMs Large Language Models. Looked at Hugging Face a few years back but didn't realize that they have a "ChatGPT" like tool available for ML for free usage called AutoTrain:

https://huggingface.co/autotrain

Tiiuae-Falcon for Chat:

https://huggingface.co/blog/falcon

https://huggingface.co/tiiuae

https://huggingface.co/spaces/tiiuae/falcon-chat

List of Best LLMs:

https://beebom.com/best-large-language-models-llms/

Leader board of LLMs available:

https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

Asked this question below and it returned the following:

https://huggingface.co/spaces/mosaicml/mpt-30b-chat

https://huggingface.co/autotrain

Tiiuae-Falcon for Chat:

https://huggingface.co/blog/falcon

https://huggingface.co/tiiuae

https://huggingface.co/spaces/tiiuae/falcon-chat

List of Best LLMs:

https://beebom.com/best-large-language-models-llms/

Leader board of LLMs available:

https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

Asked this question below and it returned the following:

https://huggingface.co/spaces/mosaicml/mpt-30b-chat

Can you create a simple python def function for calculating Pi?

Sure, here's a simple function that calculates an approximation of Pi using the Leibniz formula:

def calculate_pi():

n = 1000

total = 0

for i in range(n):

x = (2 * i + 1) / (2 * (n + 1))

total += x ** 2

pi = 4 * total / (n * (n + 1))

return pi

This function calculates the value of Pi by summing the squares of the first n terms of the Leibniz formula, where n is the number of terms. The value of n is set to 1000, but you can adjust this to get a more accurate approximation of Pi.

You can call this function by simply typing calculate_pi() in your Python interpreter, and it will return the calculated value of Pi.

Last edited by Chromium6 on Sun Jul 09, 2023 11:03 pm; edited 1 time in total

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Asked for a Spark GraphX script for the periodic table:

-------

-------

Create a GraphX script for a graph database using the Periodic Table as the data source.

Here's a sample GraphX script that creates a graph database using the Periodic Table as the data source:

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.graphx._

import org.apache.spark.graphx.lib._

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, SparkSession}

object PeriodicTableGraph {

def main(args: Array[String]): Unit = {

// Create a SparkSession

val spark: SparkSession = SparkSession.builder()

.appName("PeriodicTableGraph")

.master("local[*]")

.getOrCreate()

// Create a GraphX Graph

val sc: SparkContext = spark.sparkContext

val graph: Graph[String, String] = Graph(sc.textFile("periodic_table.txt"), EdgeTriplet(0))

// Define the edge properties

case class Edge(weight: Double)

// Add edges to the graph

val edges: RDD[EdgeTriplet[String]] = sc.textFile("periodic_table_edges.txt")

.map

What is in the periodic_table_edges.txt file?

The periodic_table_edges.txt file is a text file that contains the edge data for the Periodic Table graph. Each line of the file represents an edge between two nodes (elements) in the graph, with the format:

For example, if we want to add an edge between node "Helium" and "Lithium" with a weight of 2.98, we would add the following line to the periodic_table_edges.txt file:

Helium 2.98 Lithium

The edge weight can be any value, but it is typically used to represent the similarity or distance between the nodes in the graph. In this case, we could use the atomic number, atomic mass, or other properties of the elements to define the edge weights.

What is in the periodic_table.txt file?

The periodic_table.txt file is a text file that contains the node data for the Periodic Table graph. Each line of the file represents a node (element) in the graph, with the format:...

For example, if we want to add the node "Helium" to the graph with its atomic number and atomic mass, we would add the following line to the periodic_table.txt file:

Helium 1 2.01

The node properties can be any values, but they are typically used to represent the characteristics of the nodes in the graph. In this case, we could use the element name, atomic number, atomic mass, chemical symbol, or other properties of the elements to define the node properties.

Chromium6- Posts : 726

Join date : 2019-11-29

Re: Machine Learning for Understanding Materials Synthesis

Re: Machine Learning for Understanding Materials Synthesis

Came across this. Kind of interesting Microsoft Research podcast on finding the "right compound" for enhancing flow batteries and renewable energy:

https://www.microsoft.com/en-us/research/podcast/collaborators-renewable-energy-storage-with-bichlien-nguyen-and-david-kwabi/

https://sites.google.com/umich.edu/kwabilab/home

https://www.nature.com/articles/s41467-023-39257-z

https://www.microsoft.com/en-us/research/project/project-carbonix/

https://www.microsoft.com/en-us/research/podcast/collaborators-renewable-energy-storage-with-bichlien-nguyen-and-david-kwabi/

https://sites.google.com/umich.edu/kwabilab/home

https://www.nature.com/articles/s41467-023-39257-z

https://www.microsoft.com/en-us/research/project/project-carbonix/

Chromium6- Posts : 726

Join date : 2019-11-29

Similar topics

Similar topics» Machine learning enables predictive modeling of 2-D materials

» 'AI brain scans' reveal what happens inside machine learning

» Mol2vec: Unsupervised Machine Learning Approach with Chemical Intuition

» Physics Timeline for Understanding

» Quantum simulation: A better understanding of magnetism

» 'AI brain scans' reveal what happens inside machine learning

» Mol2vec: Unsupervised Machine Learning Approach with Chemical Intuition

» Physics Timeline for Understanding

» Quantum simulation: A better understanding of magnetism

Page 1 of 1

Permissions in this forum:

You cannot reply to topics in this forum|

|

|