Image Recognition - Tensor Flow

Miles Mathis' Charge Field :: Miles Mathis Charge Field :: The Charge Field Effects on Humans/Animals

Algorithm Accurately Reconstructs Faces From A Monkey’s Brain Waves

By Nathaniel Scharping | June 2, 2017 1:38 pm

http://blogs.discovermagazine.com/d-brief/2017/06/02/brain-waves-faces/

Image Recognition

https://www.tensorflow.org/tutorials/image_recognition

Our brains make vision seem easy. It doesn't take any effort for humans to tell apart a lion and a jaguar, read a sign, or recognize a human's face. But these are actually hard problems to solve with a computer: they only seem easy because our brains are incredibly good at understanding images.

In the last few years the field of machine learning has made tremendous progress in addressing these difficult problems. In particular, we've found that a kind of model called a deep convolutional neural network can achieve reasonable performance on hard visual recognition tasks -- matching or exceeding human performance in some domains.

Researchers have demonstrated steady progress in computer vision by validating their work against ImageNet -- an academic benchmark for computer vision. Successive models continue to show improvements, each time achieving a new state-of-the-art result: QuocNet, AlexNet, Inception (GoogLeNet), BN-Inception-v2. Researchers both internal and external to Google have published papers describing all these models, but the results are still hard to reproduce. We're now taking the next step by releasing code for running image recognition on our latest model, Inception-v3.

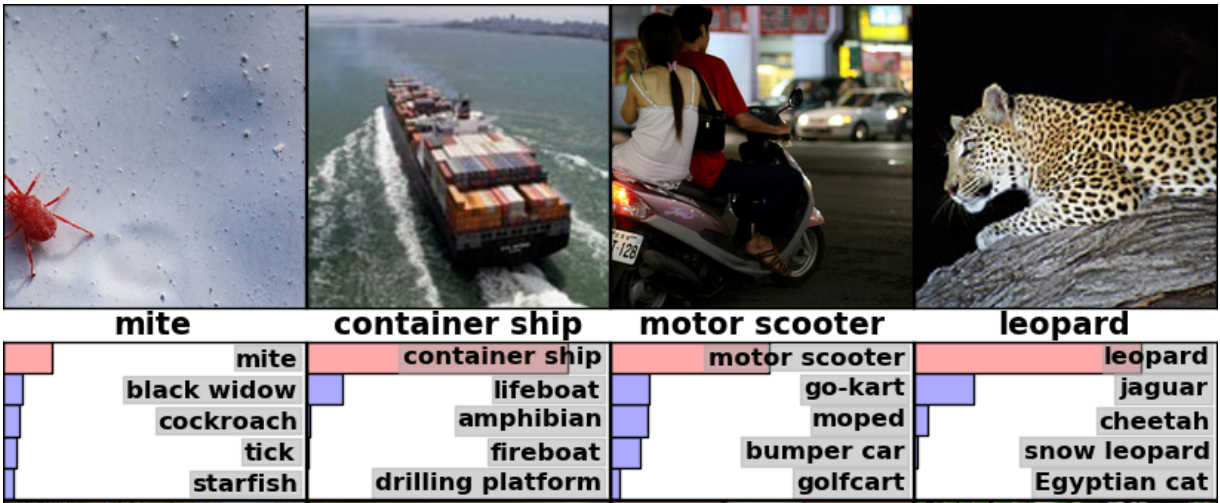

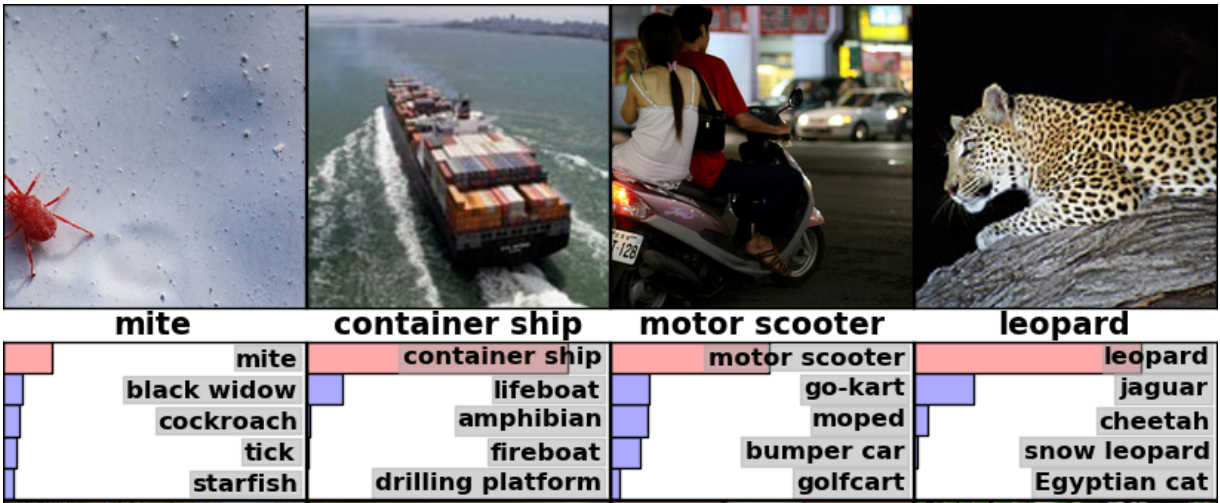

Inception-v3 is trained for the ImageNet Large Visual Recognition Challenge using the 2012 data. This is a standard task in computer vision, where models try to classify entire images into 1000 classes, like "Zebra", "Dalmatian", and "Dishwasher". For example, the original tutorial illustrates this with AlexNet's results on some sample images.

To compare models, we examine how often a model fails to include the correct answer among its top 5 guesses, a measure termed the "top-5 error rate". AlexNet set a top-5 error rate of 15.3% on the 2012 validation data set; Inception (GoogLeNet) achieved 6.67%; BN-Inception-v2 achieved 4.9%; Inception-v3 reaches 3.46%.

How well do humans do on the ImageNet Challenge? Andrej Karpathy wrote a blog post describing his attempt to measure his own performance; he reached a 5.1% top-5 error rate.

This tutorial will teach you how to use Inception-v3. You'll learn how to classify images into 1000 classes in Python or C++. We'll also discuss how to extract higher-level features from this model, which may be reused for other vision tasks.

We're excited to see what the community will do with this model.

Similar topics

» Constructing a Mathis' Charge Flow Chip?

» Blood flow defies the laws of fluid dynamics

» CELLCUBE - Based on the vanadium redox flow technology

» Creating a Bonding Charge Flow Json schema?

» New method uses heat flow to levitate variety of objects
